Shooting yourself in a foot with django and recovering from it

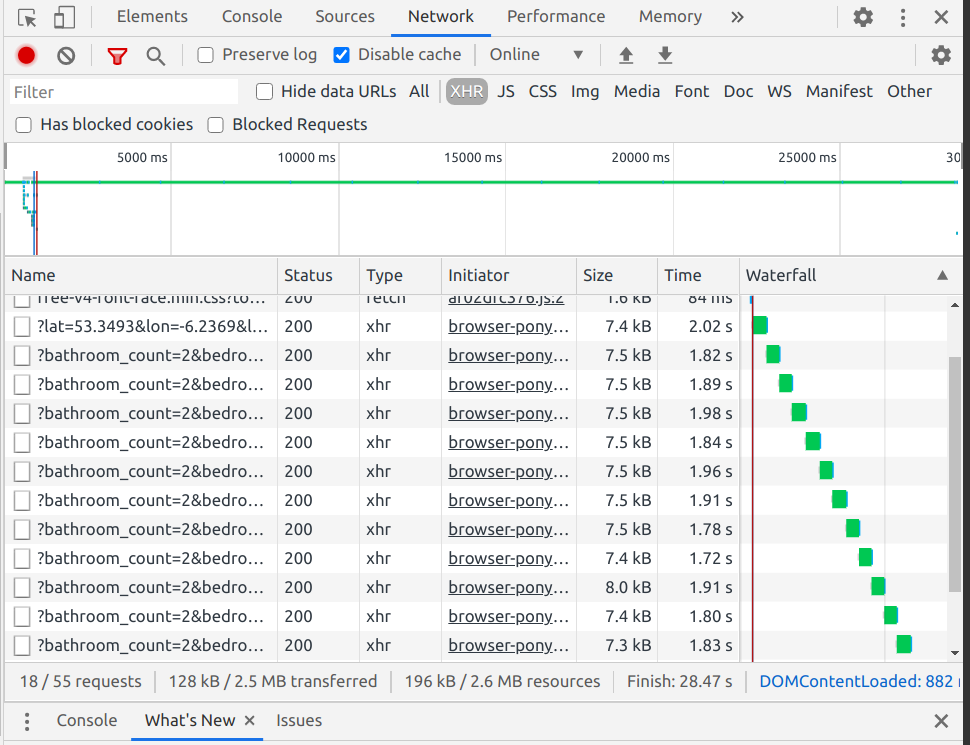

I have recently built an API (for my side project Redeal) that turned out extremely slow. I knew that what I was building wasn’t supposed to be super fast or optimal and I was building it with python and django which aren’t really known for their speed, but I haven’t expected such atrocious performance:

Yup, you are seeing it right, it was taking about 30 seconds for all the API calls finish. Each individual call was slow and there were also multiple of them. In this post I will tell you what I did to make it more than 200x faster.

The problem I was facing wasn’t something fundamental to the tools I was using. I didn’t have to rewrite my app to a faster language like Go, or to ditch my SQL database.

I was pretty sure that the slowness in this case was self inflicted.

Django is one of my go to tools for building any sorts of web apps. I love the batteries included, so that I don’t have to reinvent the wheel over and over. The downside is that sometimes it’s way to easy to misuse the solution and end up with the app being very slow.

Where to even start?

My first instinct when I see a problem is to come up with some ideas straight off the bat.

Here is something that crossed my mind:

Should I use async generators with concurrent promises to parallelize my API calls? Should I add backend caching?

It would probably be fun to implement, but this is the wrong approach to performance debugging and at best would optimize my API calls to take about 2 seconds. Which would be still way to slow for my standards. I believed the problem was on the backend side.

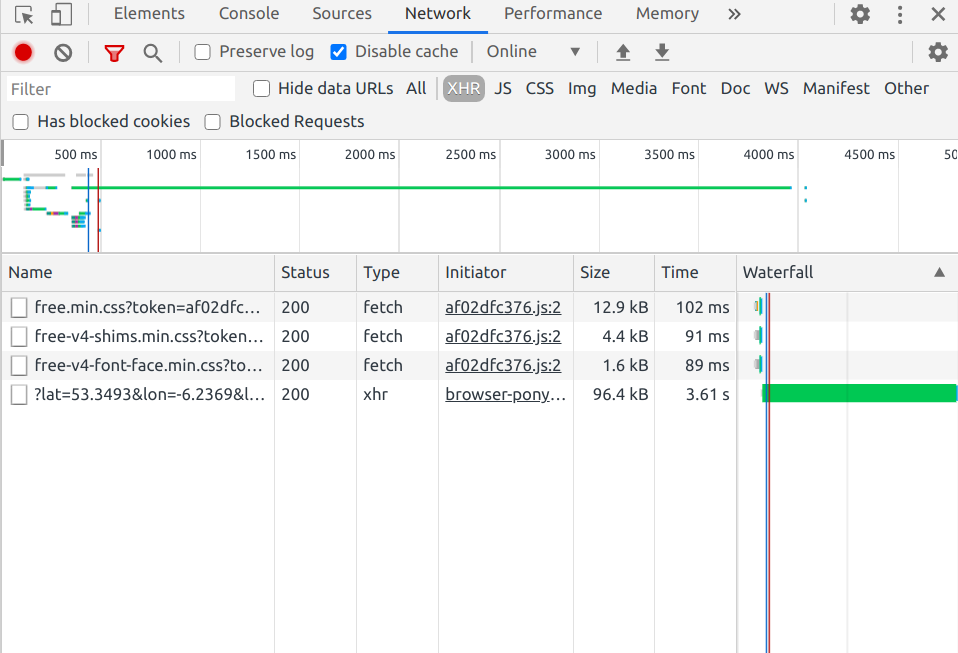

The reason behind why there were so many API calls was that I was using an API with pagination and I was fetching next pages after previous ones completed. To simplify debugging I decided to make my pages huge so that I would only need one API call.

Since I was using Django Rest Framework with the custom paginator class, it was pretty easy to override and I simplified my problem to just one big API call. I could have also change the pagination options from the JavaScript side, but since in this case I controlled both, I chose to make changes on the backend side.

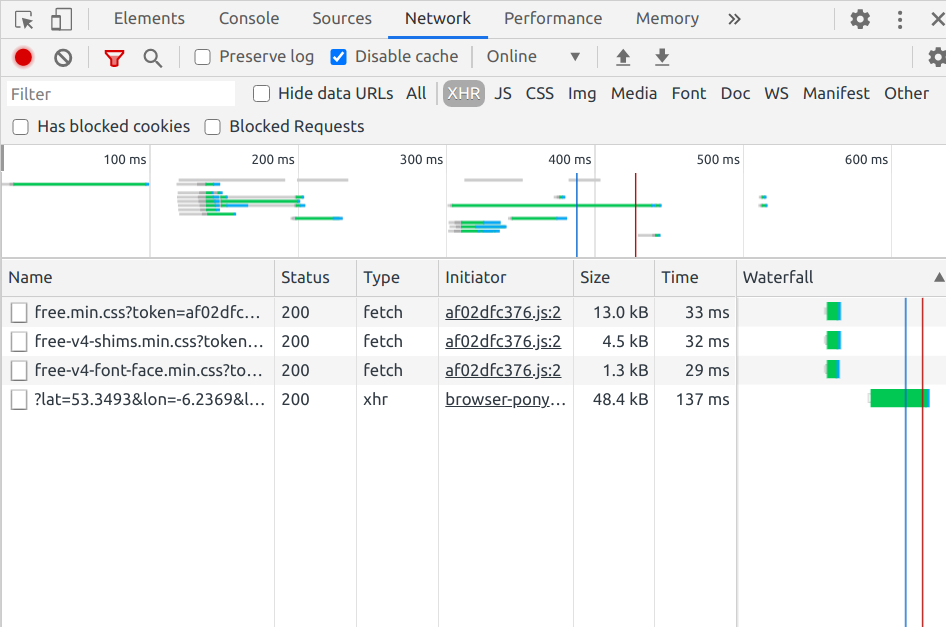

With the pagination change I ended up having just one slow request instead of many:

First hint: overall it was significantly faster than smaller but sequential requests.

Django Debug Toolbar

I usually start debugging performance of django web apps by looking at what Django Debug Toolbar can tell me. Django Debug Toolbar is a configurable set of panels that display various debug information about the current request/response and when clicked, display more details about the panel’s content. You can read how to set it up here.

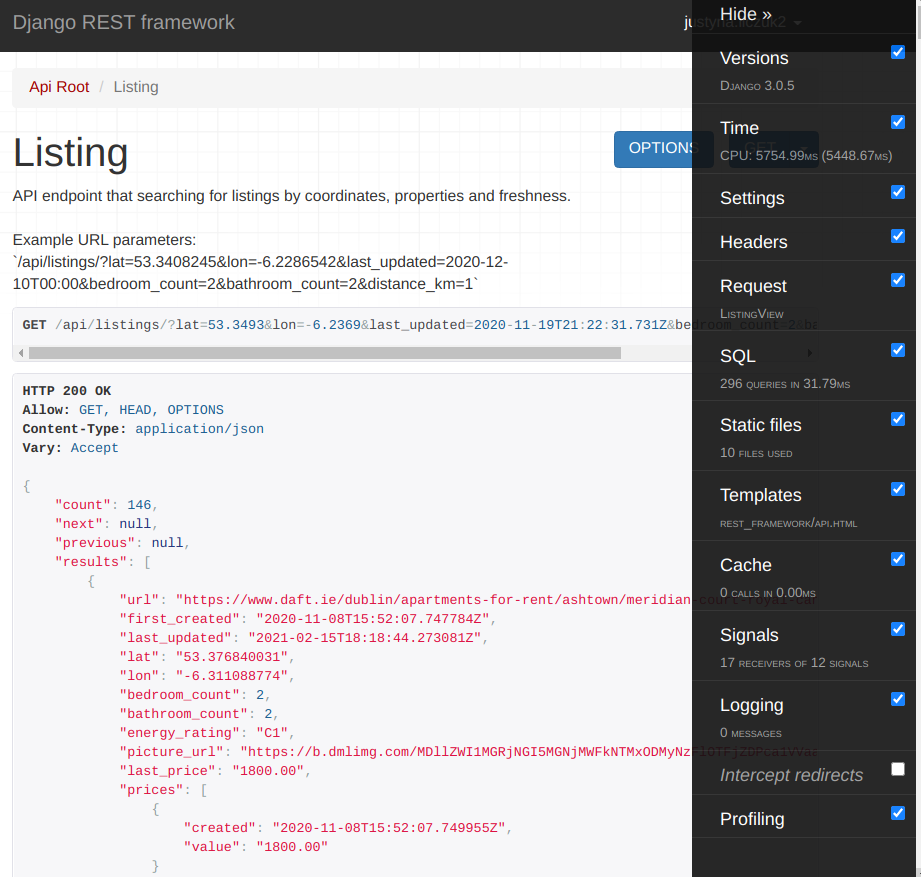

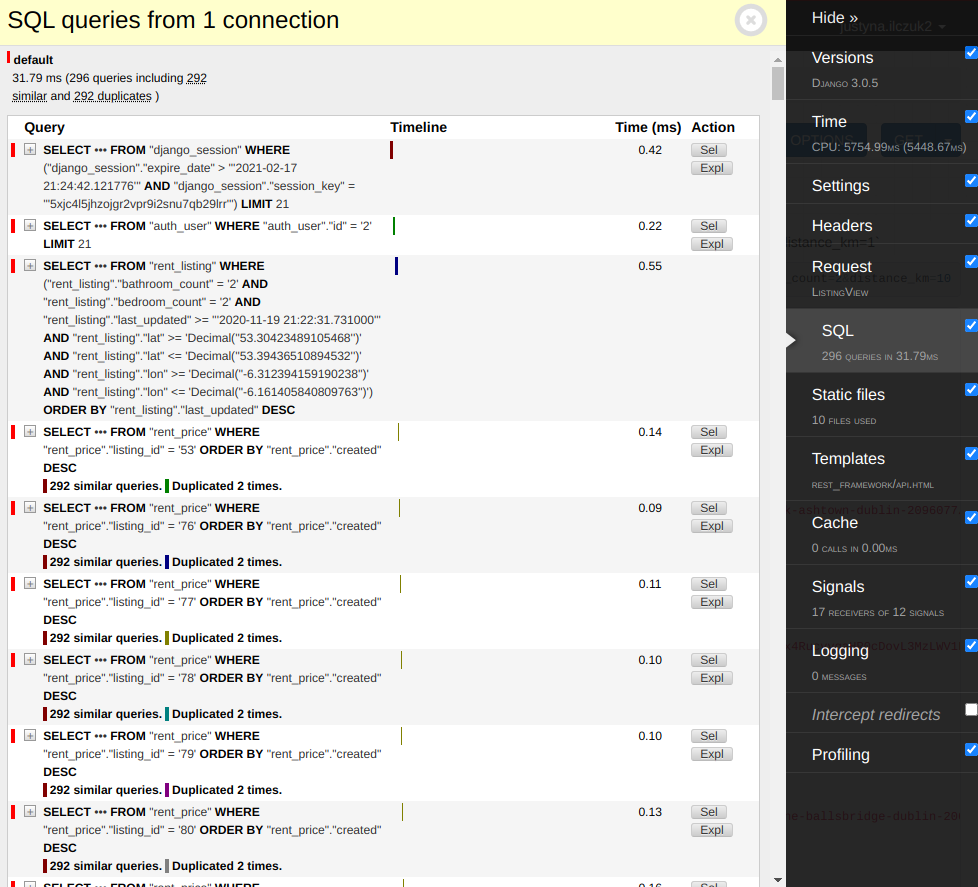

So, since now I had just a single, but very slow API call, I opened it in it’s own tab. Since I was using Django Rest Framework, the JSON was rendered nicely and I could also see the Debug Toolbar on the side.

The things that immediately got my attention:

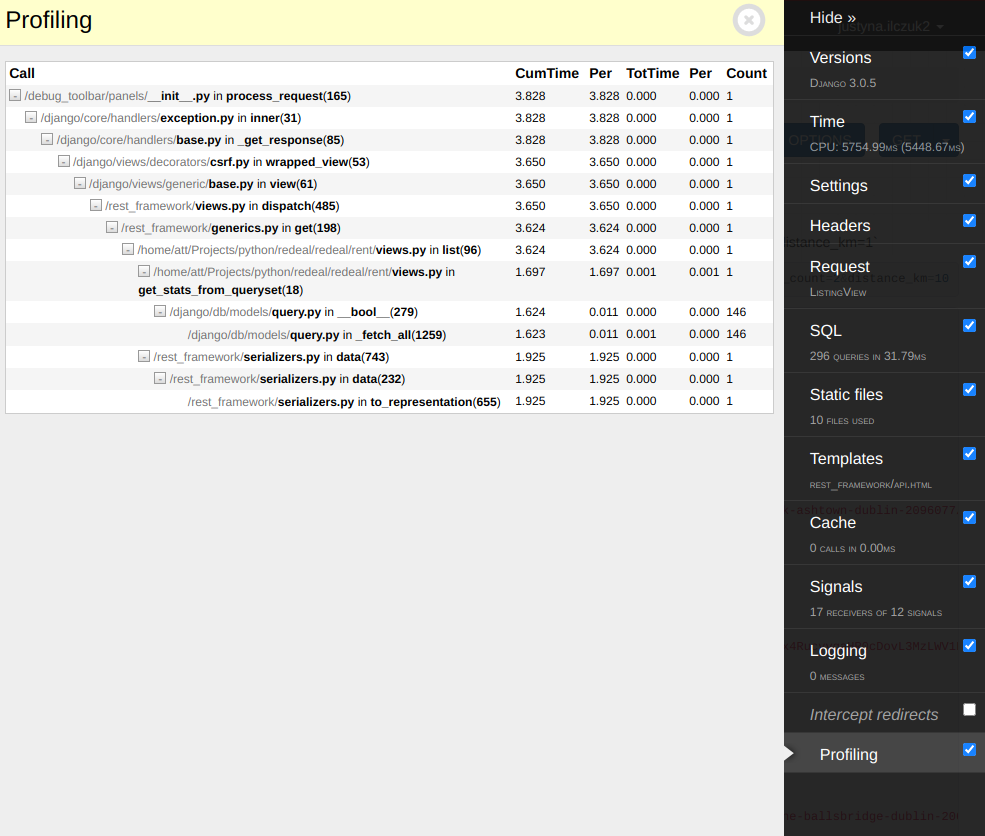

- Time (5s+)

- SQL (296 queries!) - each query individually was fast and

When you click at the navigation in the toolbar you can see more info in the panel.

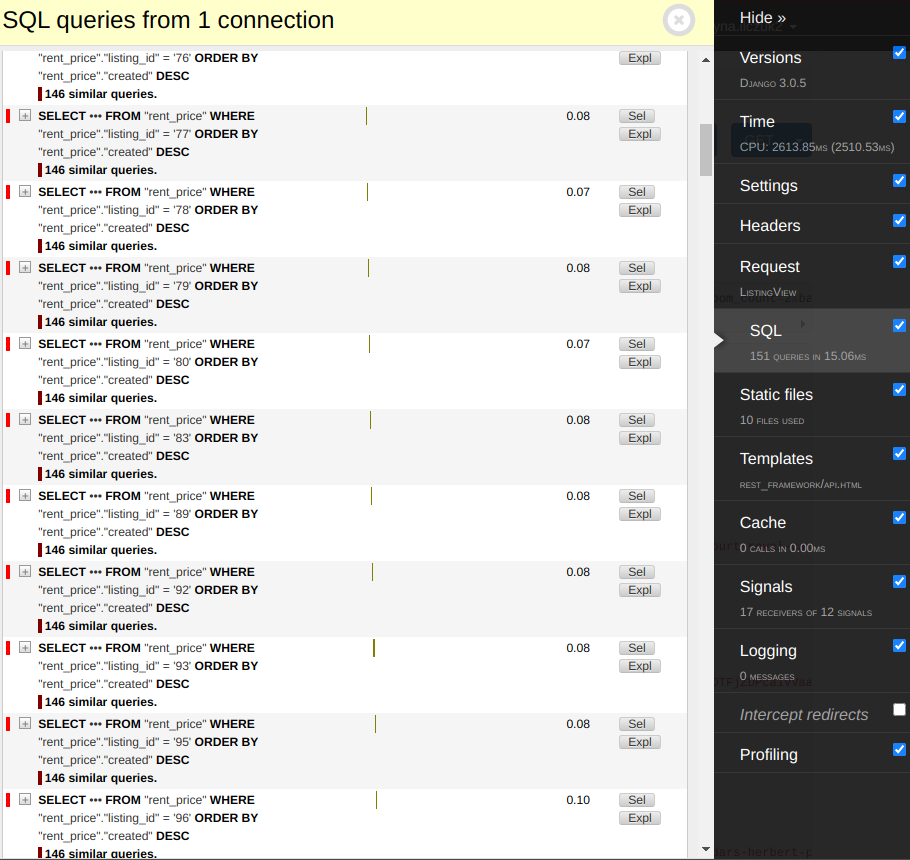

There I saw that there were extremely many similar to each other queries that were sequential and that they were taking a very long time, because of that.

There I saw that there were extremely many similar to each other queries that were sequential and that they were taking a very long time, because of that.

Another panel that I investigated was the Profiling panel that shows how long did functions in the call stack take.

The profiling panel pointed me to two problems:

get_stats_from_querysettaking 1.7srest_framework/serializers.pytaking 1.93s

Ouch.

Reason for the slowness

The truth is that I anticipated that the get_stats_from_queryset would be slow when I was writing it.

I knew that I would have to optimize it, because I was writing some fairly naive code. It was however

a bit surprising that the serializer code was also very slow.

Let’s dig into my specific problem. I’ve been using django ORM and my models where more less as follows:

class Listing(models.Model):

url = models.URLField(unique=True)

...

description = models.TextField()

class Price(models.Model):

listing = models.ForeignKey(Listing,

on_delete=models.CASCADE,

related_name='prices')

created = models.DateTimeField(auto_now_add=True)

value = models.DecimalField(

max_digits=10, decimal_places=2, null=True)

class Meta:

ordering = ['-created']

I’ve been tracking listings over time and I was maintaining the history of price changes.

What I also wanted in my API was price statistics for the subset of listings matching given criteria.

That was computed in the function get_stats_from_queryset which result would later be added to the response the

Django Rest Framework ListAPIView would return.

Here is the listing of how the records were prepared to later get statistics:

def get_stats_from_queryset(queryset):

stats = {}

# Within the queryset, map each property to the last price.

last_prices = []

for listing in queryset:

prices = listing.prices.order_by('-created')

if prices:

last_price = prices[0].value

last_prices.append(last_price)

...

And here is the simplified code of the serializers:

from rest_framework import serializers

class PriceSerializer(serializers.ModelSerializer):

class Meta:

model = Price

fields = ['created', 'value']

class ListingSerializer(serializers.ModelSerializer):

prices = PriceSerializer(many=True)

class Meta:

model = Listing

fields = ['url', ... 'description', 'prices']

So, this isn’t anything super special, two models with a ForeignKey 1 to Many relationship.

The majority of the slowness was coming from a deluge of simple queries that were coming from both the serializers and the custom function that computed the stats.

Making things fast

At this point, I knew that the best way to speed up this API would be to reduce the amount of SQL queries.

I have briefly considered just writing my SQL query from scratch, but it would be surprisingly complicated to do for selecting the value of the latest price (I have walked through it and there would be at least two joins necessary).

Luckily, this is not the new problem for the django users and django offers really good tools to optimize its ORM queries. You could use select related or prefetch related.

In this case I needed to use prefetch related, because I needed to fetch multiple related objects.

I made a small tweak to the get_queryset method in my view that looked like this:

class ListingView(generics.ListAPIView):

...

def get_queryset(self):

...

return queryset.filter(

... (my custom filters) ..

).prefetch_related('prices')

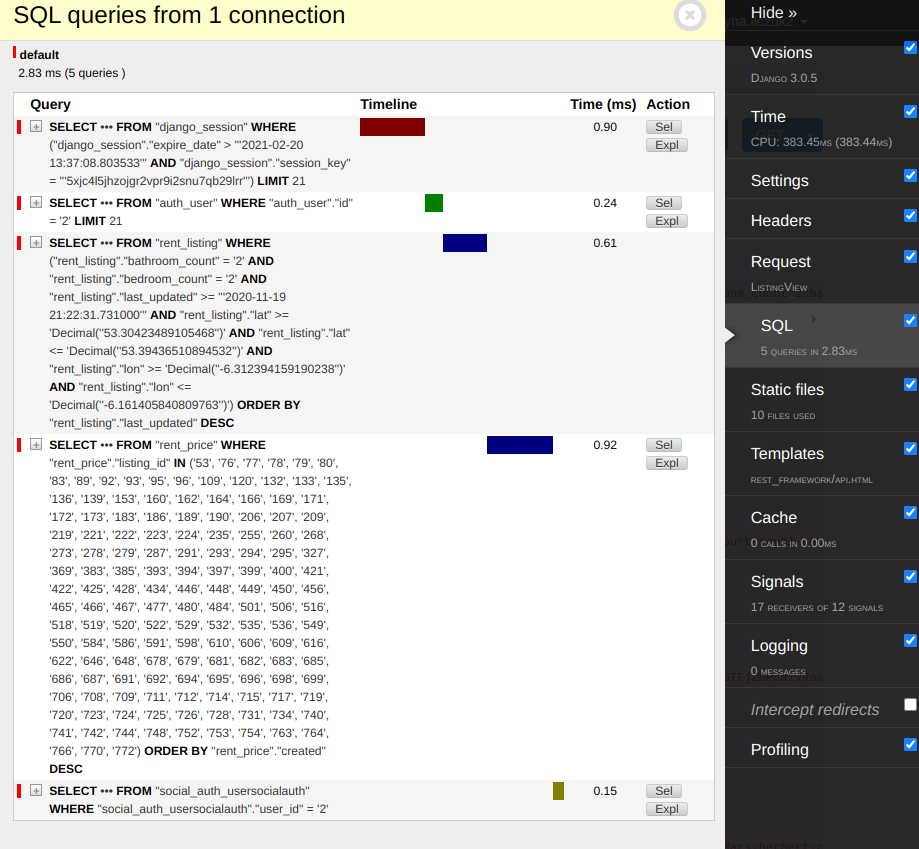

I navigated back to my django rest framework API page and checked the performance:

It improved significantly! It changed from 5.7s to ~2.5s. But it was still too slow. There were still too many queries!

The problem was that the get_stats_from_queryset was using listing.prices.order_by('-created') which had custom ordering and because of that

it wasn’t taking advantage of the prefetch!

This could be addressed in multiple ways.

- By using specifying the ordering in the

Meta

class Price(models.Model):

listing = models.ForeignKey(Listing,

on_delete=models.CASCADE,

related_name='prices')

created = models.DateTimeField(auto_now_add=True)

value = models.DecimalField(

max_digits=10, decimal_places=2, null=True)

class Meta:

ordering = ['-created']

(that I was already doing here)

- By using

myqueryset.prefetch_related(Prefetch('prices',

queryset=Price.objects.order_by('-created')))

When either one 1. or 2. is used and if we drop the order_by from prices (replacing it with prices = listing.prices.all()), the method starts

taking advantage of the prefetch_related while still remaining correct. You can both inspect the SQL queries to make sure and check if your unit tests still pass!

So, here we have it, now without the deluge of the SQL queries:

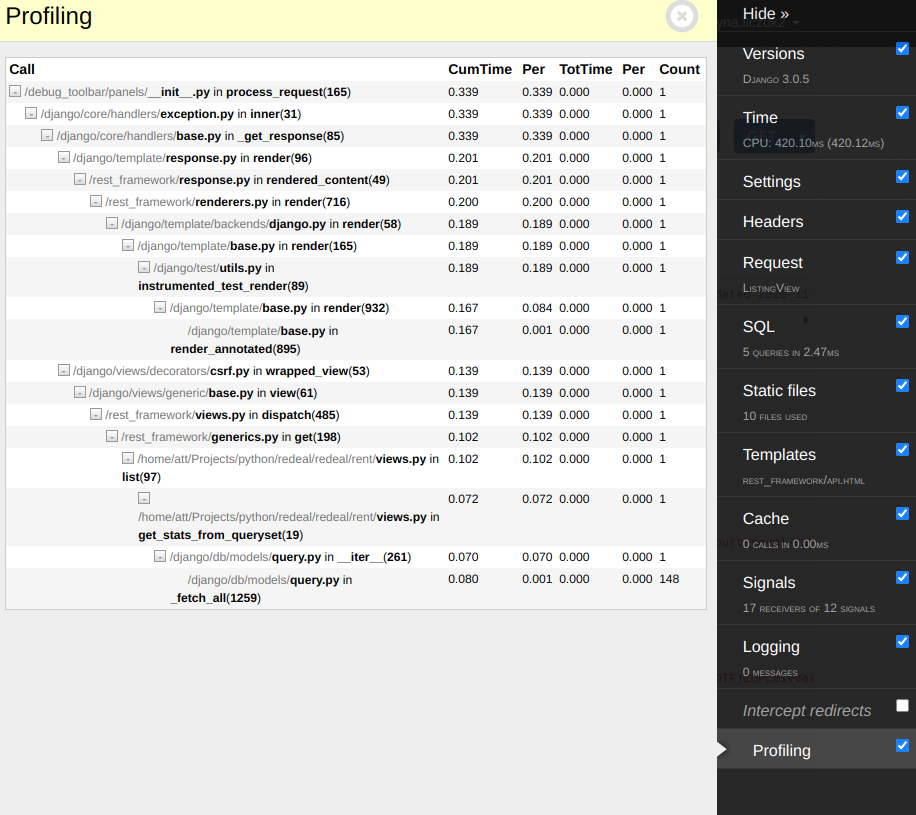

It’s still not very fast, overall the profile shows about 400ms to generate the response. Big chunk of that time comes from the rendering the fancy HTML view

from the Django Rest Framework that we can see on the following CPU profile:

When we request the API in the JSOn format it takes on average 230-250ms.

Making it even faster

Taking 250ms to fetch about 150 records and also compute a bunch of statistics about them it’s not a bad result. But in my mind it was still pretty slow. I further made it about 40-50% faster.

The thing was that I didn’t actually need all the prices in my API, I just needed the last price for each listing. With this mind, I denormalized my db

and added a new field called last_price on each listing, which would be added to each listing. That wouldn’t be a problem because I would generally create and update the listing and prices within one method and a single transaction.

I wrote a data migration in django that automatically filled in the new database field based on the prices.

https://docs.djangoproject.com/en/3.1/howto/writing-migrations/

My migration looked similar to:

from django.db import migrations, models

def fill_last_price(apps, schema_editor):

Listing = apps.get_model('myapp', 'Listing')

for listing in Listing.objects.all():

prices = listing.prices.order_by('-created')

if prices:

listing.last_price = prices[0].value

listing.save()

class Migration(migrations.Migration):

dependencies = [

('myapp', '0004_listing_last_price'),

]

operations = [

migrations.RunPython(fill_last_price),

]

With that I didn’t have to use prefetch_related and I also could simplify my serializers. It generally made my code quite a bit simpler and it was a useful tradeoff for me to make.

I went from about 30s of API calls to ~140ms.

Which is more than 200x speedup for the same exact functionality.

What went well

I found the issue in the development stage and not from the customer complaints. What helped here was that I was developing with a fairly realistic dataset. If instead of 150 records, I was using 3 in development I could have completely missed the issue. So the tip here would be to have good fixtures or development data.

The second thing that went well is that I used a disciplined approach to performance improvements starting with profiling. If I have jumped to some random idea like, concurrent promises, or even blindly applying caching I would end up with much worse results.

The last thing that I want to acknowledge is that django has a rich ecosystem and great tooling that made this process fairly easy. Yes, I shot myself in the foot at first. But django helped me recover. I would recommend any django enthusiast to use the django debug toolbar. And when it comes to the django database optimization, there is also very good documentation worth reading.

If you liked this article please share it further!