Robotics Hackathon in Bimanual Manipulation in Munich

How a LinkedIn Post Led Me to a Munich Basement with Millions of Euros Worth of Robotics Equipment

My LinkedIn feed has become a stream of robotics content over the past few months. As someone diving deep into AI robotics after years in ML/AI/RL, I’ve been deliberately connecting with people pushing the boundaries of the field. So when Nicolas Keller’s post about Munich being “the world’s best place to build robots” appeared in my feed, it immediately got my attention.

A bimanual manipulation hackathon in Munich, organized in just three weeks. How cool is that?

Here’s what they promised:

- Hands-on with dual-cobot humanoid upper-body setups: 2 Franka Emika Pandas, 2 depth cameras, 1 RGB camera, and an RTX 5090 in each station

- Teleoperation with Meta Quest VR headsets

- Contributing to the MINGA research paper (with co-author potential)

- Collaborating in Munich’s growing robotics ecosystem

I had three weeks to apply, get accepted, and arrange travel during what turned out to be Oktoberfest (the Lederhosen on the robot in the picture should have been a hint! It just made the stay extra expensive).

I had recently missed LeRobot’s worldwide hackathon and wanted to jump on this opportunity. The prospect of 30+ dual-arm robot setups, high-end GPUs, industry mentors, and meeting other people passionate about robotic manipulation made it a no-brainer for me.

The hackathon experience

Most hackathons are short and involve only software. Hardware hackathons are much rarer, especially ones where the hardware is provided and high-end.

The organizers promised a lot, and I have to say they over-delivered, even though they operated on a very short timeline: just 3 weeks!

The hackathon wasn’t perfect; we hit some technical issues with the provided codebase and the robot controllers. The lab space (KI Fabrik in the Deutsches Museum) was full of amazing robots, powerful workstations, and a 3D workshop, but it also had its downsides - it was hot, humid and you could get trapped there due to the limited number of keys!

The schedule was focused on building: after one day of setup and tutorials, it was essentially “09:00–open ended — Building time” for six straight days. The main communication happened on Discord.

The setup was industrial-grade: 30+ Franka Emika Panda dual-arm configurations for about 40 participants. Each setup came with Meta Quest headsets running custom teleoperation software. There was a full workshop with 3D printing capabilities for custom grippers. The compute power was serious—workstations with the latest hardware that most of us don’t have access to.

The initial goal of the organizers was to attract local students (mostly from TUM), but the hackathon was just too attractive.

The organizers ran the selection process through a Typeform where you had to justify your presence (CV, motivation, experience). The final mix of people included PhD researchers, startup founders, industry engineers, and ambitious students. A significant number of people traveled from other countries. Everyone wanted to be there.

Industry engagement and realistic use cases

The real differentiator was the industry backing. BMW and Siemens provided realistic challenges to be solved, explained the details, provided physical materials and sponsored prizes.

Additionally, there were helpful lectures and mentoring from Nvidia, Hugging Face LeRobot and KIT (a big German university).

When Sunday’s final presentations came, BMW and Siemens employees showed up to judge the results personally. This wasn’t academic theory - teams were working on problems that companies actually need solved, with the decision-makers accessible throughout and present for the final outcomes.

The main organizers were TUM and Poke & Wiggle. I was very impressed with both. TUM showed great support for students and entrepreneurship. The Poke & Wiggle team pulled everything together on the technical side. They stayed late and even hosted some participants from abroad in their homes! They were testing their own software stack while building what they claimed would become the largest public bimanual manipulation dataset.

My strategy & Learning

Probably my favorite thing about this hackathon was that it was extremely collaborative. Yes, people tried to win, but I was able to learn both from my team as well as from others.

Team formation & collaboration

I came to the event without knowing anyone and needed to form a team. That was actually a pretty common situation: many people didn’t know who to pair with. I have been in setups like this before, and that experience helped.

To form a good team you want the best people, but it’s really hard to assess people quickly, and someone who seems great at the start might not end up performing well. For example, they might not be fully available, or you might not get along.

So my strategy was to talk to most people and see how they think and how experienced they are. I was selecting for getting along, enthusiasm, and general intelligence. It mattered less to me that someone was inexperienced as long as they were energetic and open-minded.

We weren’t the most effective or best organized, but we really enjoyed our time together, made good progress and learned a lot. Our team also shifted a bit during the 7 days (one person got sick and we adopted another one).

We used a WhatsApp group to share resources, set up a GitHub repo for shared scripts and shared some notes on a Google doc.

Our strategy was as follows:

- get familiar with the teleoperation

- try out all available tasks and assess their feasibility for the human operators

- train and deploy the models ASAP to test the pipeline (yes, we detected bugs and further limitations)

- pursue the most promising tasks, refine the dataset collection and experiment with the models for good performance

Learnings

We didn’t manage to win any categories. I think that my group was more focused on learning and experimentation, instead of purely competing to win.

Some takeaways:

- If the task can’t be done by the human, the robot won’t be able to do it

- Training loss is not a good predictor of real-world performance

- Robot safety mechanisms were critical to avoid breaking the robot

- Evaluation in real life is risky and some simulation setup would be helpful

- End-effector control with inverse kinematics often caused the robots to get stuck at joint limits; the demos had to be teleoperated with special care so that the learned policy wouldn’t drive the robot into a blocked configuration

- Two arms are much harder than one

- Dataset quality matters a lot (recovery examples, non-Markovian states are confusing, noise/operators in the setup)
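To make the dataset-quality point concrete, here is a minimal sketch of the kind of episode selection we experimented with: drop the bad episodes, then subsample recovery episodes to hit a target share of the training set. This is not the hackathon codebase. The `Episode` class, its `is_recovery` and `is_bad` labels, and the `select_episodes` helper are hypothetical names for illustration, and it assumes episodes were tagged by hand.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Episode:
    # Hypothetical per-episode labels, assumed to be assigned by hand.
    episode_id: int
    is_recovery: bool = False
    is_bad: bool = False


def select_episodes(episodes, recovery_ratio, seed=0):
    """Drop bad episodes, then subsample recovery episodes so they make up
    roughly `recovery_ratio` of the returned set (fewer if not enough exist)."""
    if not 0.0 <= recovery_ratio < 1.0:
        raise ValueError("recovery_ratio must be in [0, 1)")
    good = [e for e in episodes if not e.is_bad]
    normal = [e for e in good if not e.is_recovery]
    recovery = [e for e in good if e.is_recovery]
    # Keep every normal episode and solve ratio = k / (k + n) for k.
    wanted = round(recovery_ratio * len(normal) / (1.0 - recovery_ratio))
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    kept = rng.sample(recovery, min(wanted, len(recovery)))
    return sorted(normal + kept, key=lambda e: e.episode_id)
```

Keeping the seed fixed means two training runs that differ only in `recovery_ratio` are compared on reproducible subsets, which makes it easier to tell whether the ratio or the random draw changed the outcome.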

What we tested

- 3 different task setups with hundred+ demonstrations each

- variations in model training (steps, parameters, models, action space) and dataset selection (recovery episodes ratio, bad episodes)

- different control modes (delta and absolute)

- we could only try actions in the end-effector (EE) space; the joint-space controllers weren’t working correctly

- SmolVLA and ACT models from the LeRobot library

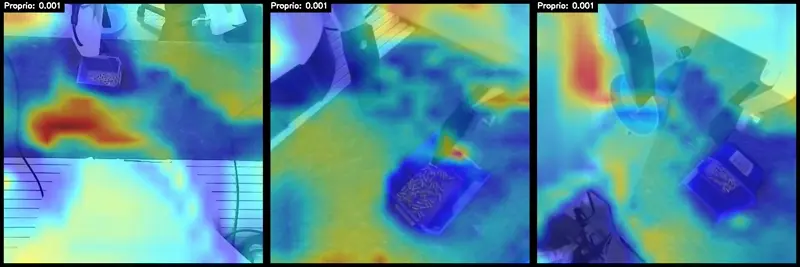

- performed experiments for generalization and resilience (e.g. messing with the cameras made the robots much less effective); we also visualized attention maps for the ACT models

Here is an example of an attention map:

(Kudos to physical AI Interpretability Repo - the author was there during the event and helped us a bit with the setup).
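For readers unfamiliar with the delta and absolute control modes mentioned in the list above, here is a rough sketch of how the two action representations relate for end-effector positions. It is an illustration under my own assumptions, not the code we ran: the function names are made up, orientation and gripper channels are left out, and each delta is taken relative to the previous target rather than the measured pose.

```python
import numpy as np


def absolute_to_delta(abs_targets, start_position):
    """Turn absolute EE position targets of shape (T, 3) into per-step deltas.

    Assumption: each delta is relative to the previous target, and the first
    one is relative to `start_position`.
    """
    abs_targets = np.asarray(abs_targets, dtype=float)
    previous = np.vstack([start_position, abs_targets[:-1]])
    return abs_targets - previous


def delta_to_absolute(deltas, start_position):
    """Integrate per-step deltas back into absolute EE position targets."""
    deltas = np.asarray(deltas, dtype=float)
    return np.asarray(start_position, dtype=float) + np.cumsum(deltas, axis=0)
```

One practical difference: with deltas, small prediction errors accumulate over a rollout, while absolute targets tie the policy to the workspace coordinates seen in the demos.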

Misc

- I got a pretty good feel for teleoperation in VR (it’s hard though!)

- I managed to get the arms to crash into each other and got my robots stuck countless times

- I spent some time setting up a simulation environment with Panda in MuJoCo and playing with Isaac Sim (approach abandoned in the end)

- I read several papers recommended by other participants

- I wasn’t able to try Pi0/Pi0.5: the ready-made snapshots are for the same type of robot (Panda) but use a very different action space, and we didn’t have the time or resources for fine-tuning from scratch (80GB+ of GPU memory required)

What I wished I could do:

- try out GR00T / Pi0.5

- simulation, sim-to-real, and RL fine-tuning for the trained VLA (a simple VLA would be perfect!)

Posts describing the experiences of some of my teammates: @Artur and @Andrea.

We Need More of This

After seven days of intense collaboration in a basement, we didn’t revolutionize the future of robotics, but we all learned a lot and everyone came back home more experienced and inspired.

It was a great event!

And I want to see more events like this in Europe, because the future of robotics doesn’t have to happen in SFO (or China).

Europe has the ingredients for world-class robotics innovation. We have strong engineering talent and strong reasons to invest (an aging population)! I live in Poland now, a place that produces some of the world’s best software engineers, but the innovation is lacking. I talked to two universities in Warsaw, and they don’t really innovate in embodied intelligence or even follow the current state of the art yet.

And it’s a shame, because with libraries such as LeRobot, open hardware, and open-source simulation engines, the space is now much more accessible.

I was very impressed with TUM and many of the students. I would like to support the ecosystem in Poland and Europe. Munich proved it’s possible. Let me know if you would like to help!

Next steps

I left the event pretty drained, but also very excited! I am still following up on the various threads I started during the hackathon. I have also started looking at another exciting challenge, one focused on household tasks in simulation.

Currently I’m especially interested in approaches that combine VLAs with RL, like the ones outlined in the SimpleVLA paper, and I would love to participate in more hardware hackathons, ideally ones combining simulation and real-world learning.