My deep dive into robotics: Part 1

This post is the first in a series documenting my journey into robotics. I’m writing these to reflect on my progress and strategy, but also to help or inspire anyone considering a similar leap.

If you’re curious about AI, building physical systems, or carving out your own path into deep tech—this one’s for you.

Why robotics & my background

I grew up on a farm, so I’m no stranger to tedious manual labor. I also happen to be very lazy when it comes to physical work: as a kid I never wanted to help out on the farm or do chores. I’m not proud of it, but it’s true! I’d always rather be learning or building something than repeating the same task over and over.

Why not make robots do the boring work?

I’ve long believed that robotics, especially embodied intelligence, has huge potential to reshape critical parts of our world: infrastructure, food systems, care work. Europe, where I am based, is facing an aging crisis, and labor keeps getting more expensive, which drives up the cost of housing, food and services. But seriously, I don’t think this trend needs to continue. Cheap electricity from nuclear and renewables, combined with robotics, can make labor cheap again and drastically improve quality of life for everyone if things go right (yes, I’m a techno-optimist for sure!).

I’ve also been deeply into reinforcement learning for years. I remember doing the University of Alberta RL specialization on Coursera and being struck by the elegance of the math and the philosophical depth of the agent paradigm. It felt personal—figuring out how to learn, how to explore, how to act in an uncertain world. I could relate, as a human.

This project came at a natural inflection point. I had just finished a startup accelerator, moved back to Poland, gained access to a physical workshop with my brother, and met someone who introduced me to the LeRobot ecosystem.

During the startup accelerator, I had a lot of time to ponder what type of business I would like to do. Robotics was definitely something I was interested in, but I didn’t have a competitive edge, due to lack of practical experience.

I wanted to do something technically deep and fun—and potentially startup-worthy. Robotics fit perfectly.

The LeRobot ecosystem really piqued my interest and made actual robotics seem much more approachable and accessible. State-of-the-art models on commodity, open hardware? Led by Hugging Face (a great brand name in the ML community)? Sign me up!

What types of robotics I wanted to learn

I’m focused on manipulation—robot arms, gripping, interacting with the world. I’m less interested in mobile robots for now, and more excited by the intersection of learning-based control and practical use cases.

My application areas of interest were agriculture and construction. Both are high-impact and under-automated. Both also demand robustness and real-world intelligence—qualities modern AI might finally deliver.

Timeline and the plan

I love learning and I’ve always loved to learn on my own. As an experienced self-learner, I’ve learned that investing upfront in a good learning plan pays off massively.

A few years back I read Ultralearning by Scott Young, and one key takeaway stuck with me: people often spend too little time researching how to learn best, picking the resources and making a plan. If you are willing to spend hundreds of hours learning, pick wisely.

I am not a robotics expert, so figuring out a curriculum all by myself would be hard. I also have a pretty strong science/engineering/software background, so I didn’t want to follow something generic. So, what to do?

Well, good that we’ve got powerful LLMs to personalize our learning paths.

I used ChatGPT Deep Research to help me generate a 12-week roadmap. Using Deep Research was important, because it makes the LLM ask clarifying questions and scour the internet for you, potentially saving tens of hours of research and analysis.

The input: I had a background in software, ML and RL, was aiming for deep hands-on knowledge, had ~30 hours per week to spare, and wanted to explore robotics manipulation with an eye toward AgTech or construction startups.

I already have some background in RL and ML. I want to learn about state of the art robotics to start a company. I don’t want to do a degree. I have time and money though. I am going to build and experiment with Lerobot from hugging face soon (waiting for last parts to arrive). I have access to 3d printers and heavy workshop. Create me a 3 month learning roadmap, with project ideas, assuming 30 hours of effort available per week.

I know python and I have many years of experience in software engineering.

See the full chat history for the plan creation here.

The output was ambitious: a full-stack robotics journey mixing ROS2, LeRobot, reinforcement learning, imitation learning, simulation, hardware, and even startup-aligned thinking. It was crazy - but in a fun, exciting way.

I put the plan into a Google Doc and showed it to some people. They didn’t have major concerns apart from “woah, really?”, but I’m used to reactions like this.

See the plan as an exported Google Doc.

Why 12 weeks? It fits my regular planning rhythm: I usually plan daily, weekly and quarterly. Twelve weeks is long enough to make real progress but short enough to keep urgency high.

Plan vs Reality

I created my plan on 16 May 2025. It is now 25 July, so we are 10 weeks in. Realistically, I spent maybe 7 of those weeks on robotics, in between other projects and travel.

So how did it go?

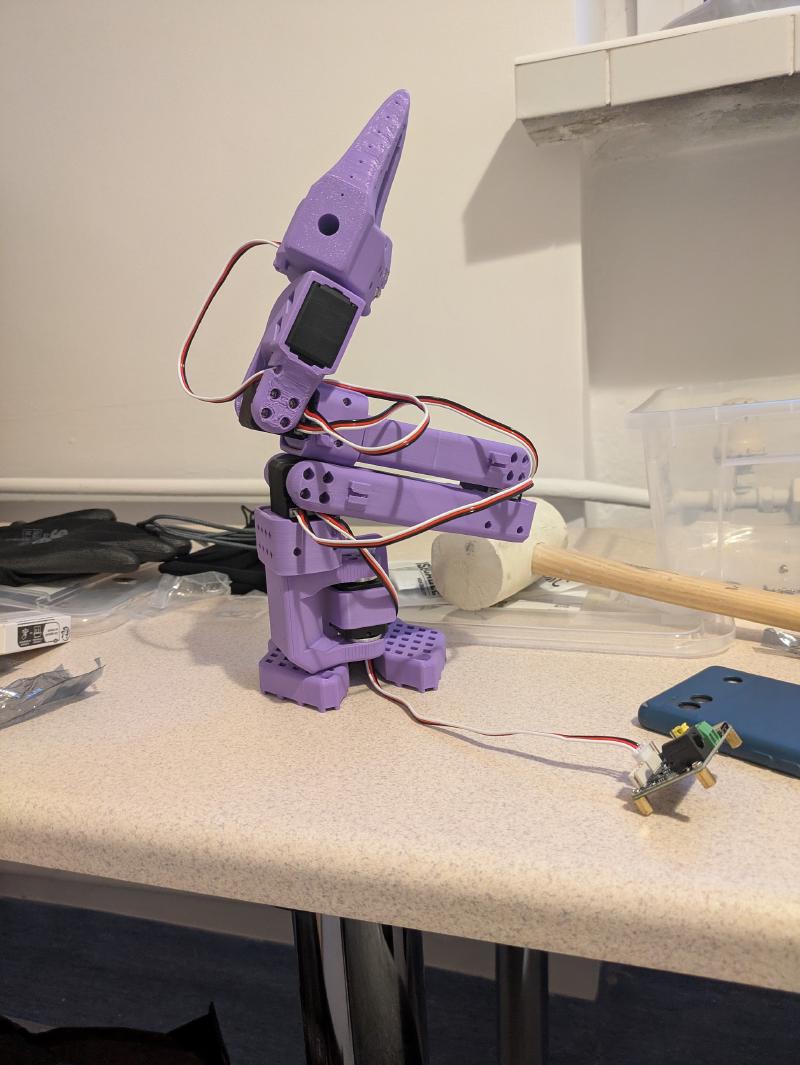

I started out following the roadmap pretty seriously. For the first two weeks, I was all in—spending ~30 hours a week, doing two full Udemy courses on ROS2 and manipulation, and diving deep into kinematics and motion planning. I even assembled the LeRobot SO101 arm around that time.

But I never treated the plan as a rigid calendar. I thought of the “weeks” more like themed modules—open to remixing, repeating, or extending. I also gave myself permission to insert breaks between phases. It was aggressive by design, but flexible in execution.

Some things I deliberately skipped or reframed. For example, I didn’t do the vision pipeline in week 4—OpenCV felt outdated for where I saw the field going. I was more interested in learning-based approaches to perception. Similarly, while I didn’t use ROS2 with my SO101 arm, I did learn how to integrate ROS2 and Arduino in earlier work with a janky robot from the course. The skill was there—I just chose not to apply it in that form.

After two solid weeks, I reached a point where I felt I had enough foundational understanding to start building something real. That was the real pivot point: I asked myself, “What would it take to actually do a project?” That question reshaped my trajectory.

Instead of following the rest of the roadmap linearly, I shifted into execution mode. I was craving a longer, more specific project, so I made another plan, this time a project-focused one: a laundry-folding robot inspired by ALOHA. See it here.

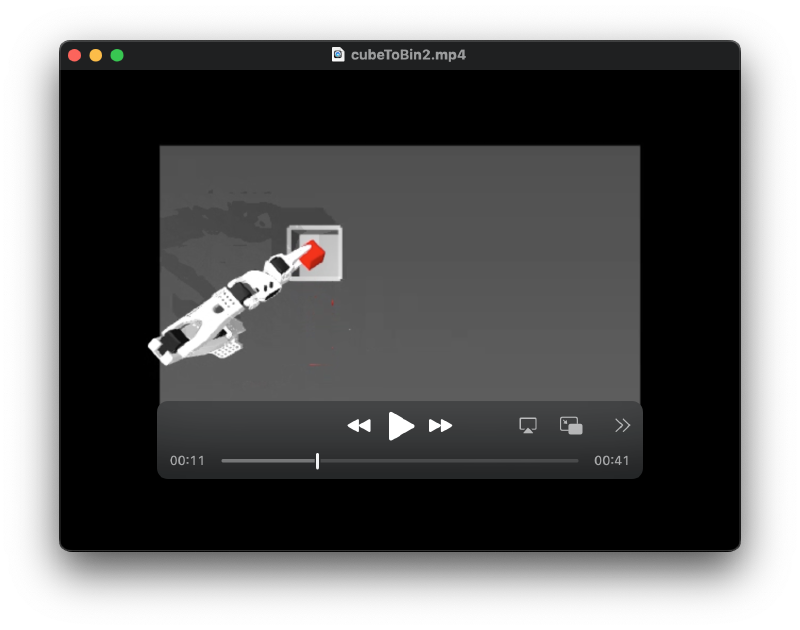

With my laundry-folding robot in mind, I built a custom gym-style simulation matching the SO101 arm, and from there dove headfirst into reinforcement learning (mostly SAC), imitation learning (BC, GAIL, AIRL), diffusion policies, and VLA models like SmolVLA and Pi0.
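To make “gym-style” concrete, here is a minimal sketch of the interface such a simulation exposes. It is not the SO101 environment from this project: the class name `ToyReachEnv`, the two-link planar arm, the link lengths and the reward are all hypothetical choices for illustration. It mirrors the Gymnasium `reset()` / `step()` contract but uses only the standard library, so it runs without a physics engine.

```python
import math
import random


class ToyReachEnv:
    """Hypothetical 2-link planar arm that must reach a random target.

    Mirrors the Gymnasium reset()/step() contract without importing the library.
    """

    def __init__(self, l1=0.12, l2=0.12, max_steps=50, tol=0.01, seed=None):
        self.l1, self.l2 = l1, l2      # link lengths in metres (assumed values)
        self.max_steps = max_steps     # episode is truncated after this many steps
        self.tol = tol                 # success radius in metres
        self.rng = random.Random(seed)

    def _tip(self):
        """Forward kinematics: joint angles -> end-effector (x, y)."""
        q1, q2 = self.q
        x = self.l1 * math.cos(q1) + self.l2 * math.cos(q1 + q2)
        y = self.l1 * math.sin(q1) + self.l2 * math.sin(q1 + q2)
        return x, y

    def _obs(self):
        # Observation: both joint angles followed by the target position.
        return (*self.q, *self.target)

    def reset(self):
        self.q = [0.0, 0.0]
        self.steps = 0
        # Sample a target inside the reachable workspace.
        r = self.rng.uniform(0.05, self.l1 + self.l2 - 0.01)
        a = self.rng.uniform(0.0, math.pi)
        self.target = (r * math.cos(a), r * math.sin(a))
        return self._obs(), {}

    def step(self, action):
        # Action: joint-angle deltas, clipped to +/- 0.1 rad per step.
        for i in range(2):
            self.q[i] += max(-0.1, min(0.1, action[i]))
        self.steps += 1
        x, y = self._tip()
        dist = math.hypot(x - self.target[0], y - self.target[1])
        reward = -dist                          # dense reward: closer is better
        terminated = dist < self.tol            # task solved
        truncated = self.steps >= self.max_steps
        return self._obs(), reward, terminated, truncated, {"distance": dist}


# Roll out one episode with a random policy.
env = ToyReachEnv(seed=0)
obs, info = env.reset()
done = False
episode_return = 0.0
while not done:
    action = [env.rng.uniform(-0.1, 0.1) for _ in range(2)]
    obs, reward, terminated, truncated, info = env.step(action)
    episode_return += reward
    done = terminated or truncated
```

An RL library such as Stable-Baselines3 expects a real `gymnasium.Env` subclass with declared observation and action spaces, so a class like this would need that wrapping before SAC could train on it.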

It didn’t match the tasks from the original plan, but it was aligned with the curriculum I wanted to cover. The plan had 3 weeks focused on RL, imitation learning and VLAs; I just covered them from the angle of a specific use case instead of following the original steps.

Yup, and I managed to teach the robot how to throw the bin to the box.

(even though some of my teleoperation demonstrations were pretty poor - see here - it’s not easy to control a robot!)

Reviewing the original plan: I haven’t touched sensing, SLAM, or domain-specific deployment yet. I already had some experience with sensing (courses in the past, etc.), but it’s definitely an area to dive deeper into, especially when I get into mobile robots.

The remaining modules (“Week 7: Advanced Sensing and Actuation – Toward Field Deployment”, “Week 9: Sim-to-Real Transfer and Scaling Up”, “Week 10: Domain Focus – Agricultural and Construction Robotics Deep Dive”) still seem very useful and interesting.

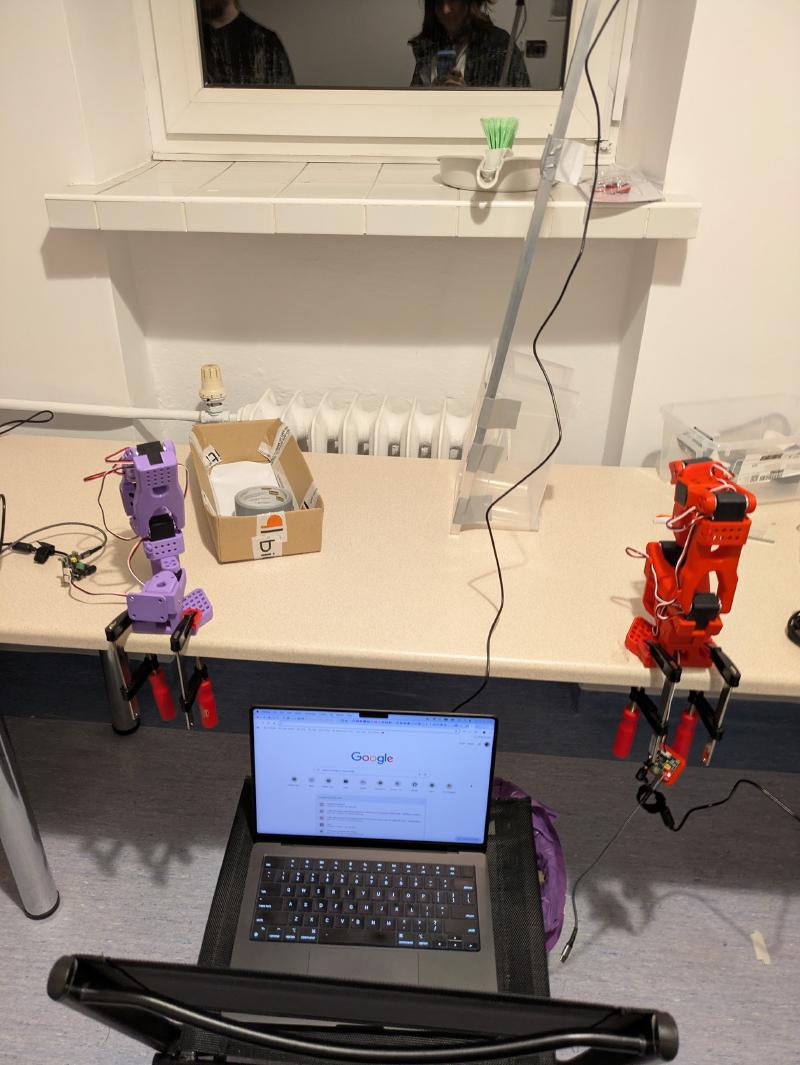

I did some data collection in real life, but my setup was super crappy:

(picture from before I invested in a tripod and more cameras)

The last week of the plan was meant for reflection. I’m doing it ahead of schedule because execution has gone off plan, even though I’m still on track for the high-level goals.

Talking to people

My official learning plan didn’t include speaking with others. But I’ve come to appreciate just how much easier — and more energizing — hard things become with the right people around.

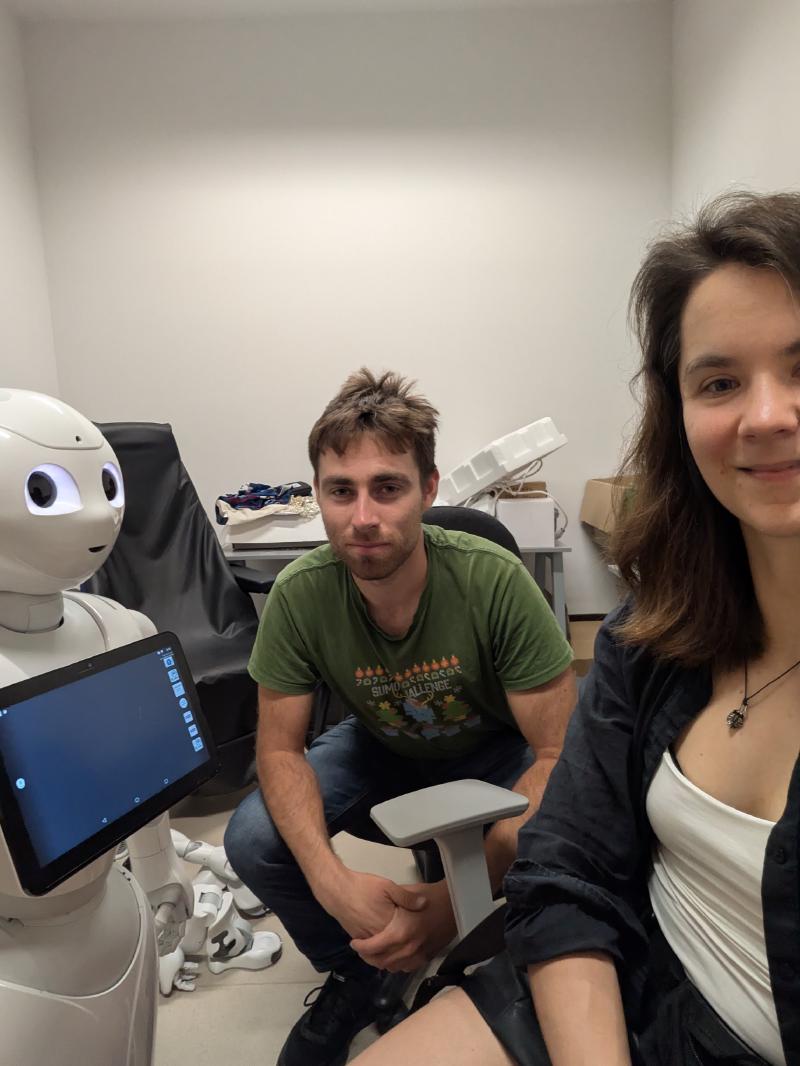

I visited two robotics labs in Warsaw and spoke with researchers there. One lab focused on swarm robotics and was led by someone with a clear entrepreneurial mindset. It was inspiring to see someone thinking about scalable systems and real-world impact. The other lab worked on human-robot interaction, especially with children — a domain I hadn’t seriously considered before, but one that sparked new curiosity.

Both labs invited me to collaborate and offered access to their robots. That kind of openness was encouraging. While neither was working directly with deep learning for robotics — the area I’m most drawn to — these conversations broadened my view of what robotics can be. They gave me strength and a sense of possibility. At one of the labs, I even reconnected with an old friend I hadn’t seen in over a decade. It was unexpectedly meaningful to find someone I knew already immersed in this space.

I also reached out to people at Warsaw-based robotics companies like nomagic.ai. So far, those connections haven’t led anywhere, but I’ll keep exploring. I missed the LeRobot worldwide hackathon, but joined the Discord and have been in occasional contact with the friend who introduced me to the platform.

I started this journey solo, with a roadmap, tools, and focus. But if I do pursue something in deep tech, I know I’ll want collaborators. These early conversations reminded me that the right people can shift what feels possible.

Reflection on the learning

The roadmap was wildly ambitious. Some topics were PhD-level, and there’s no way to do justice to them all in three months. Having a tight schedule helped prevent me from going too deep on any specific topic. That would have been fun, but I didn’t want to lose sight of the bigger picture.

Having a plan really helped, even if I didn’t stick to it strictly: it gave my learning structure and was tailored to my background.

The biggest takeaway: mixing structured learning with project-driven deep dives is incredibly effective. Courses gave me confidence; projects gave me momentum.

Some parts of the roadmap (like OpenCV pipelines or QA protocols) felt less relevant with how fast deep learning is progressing—but that’s fine. It’s all about strategic depth, not completeness.

Reflection on the plan

The 12-week structure gave me an early push, but I was always open to adjusting it. I didn’t treat the roadmap as a rigid calendar—it was more like a menu.

In retrospect, I think mixing theory and practice is hard to pre-plan. That’s where I deviated the most. But having the map made it easier to navigate.

What else from the plan do I plan to do?

I still want to:

- Build a basic vision pipeline + scripted action demo.

- Explore field deployment topics (SLAM, navigation).

- Learn more about sim-to-real strategies.

- Do a more integrated prototype + test cycle for a specific use case (e.g. fold my laundry!)

- Revisit AgTech and construction applications with more realism. Or pivot to another industry entirely (e.g. care sector).

Additionally I plan to:

- Make better demonstrations

- Record more good demos with real robots

- Build more robots - LeKiwi and XLeRobot are next!

- Besides end-to-end VLAs, also try out an LLM + inverse kinematics (IK) over MCP

- Invest in better simulation - either Isaac Sim or ManiSkill
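In the LLM + IK idea, the language model would only propose a Cartesian target, and an inverse kinematics solver would turn it into joint angles. As a minimal sketch of the IK half, here is the closed-form solution for a two-link planar arm. This is an illustration under stated assumptions, not the SO101 kinematics (that arm has more joints): the function names, link lengths and the `flip_elbow` parameter are hypothetical.

```python
import math


def two_link_ik(x, y, l1=0.12, l2=0.12, flip_elbow=False):
    """Return joint angles (q1, q2) in radians that place the tip at (x, y).

    Raises ValueError if the target lies outside the reachable workspace.
    """
    d2 = x * x + y * y
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0 + 1e-9:
        raise ValueError("target out of reach")
    c2 = max(-1.0, min(1.0, c2))       # guard against rounding just past +/-1
    q2 = math.acos(c2)
    if flip_elbow:
        q2 = -q2                       # the mirrored elbow configuration
    # Shoulder angle: direction to the target minus the offset caused by link 2.
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2


def two_link_fk(q1, q2, l1=0.12, l2=0.12):
    """Forward kinematics, used here to check the IK result."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y


# Round trip: solve IK for a target, then confirm FK lands on it.
target = (0.15, 0.10)
q1, q2 = two_link_ik(*target)
reached = two_link_fk(q1, q2)
error = math.hypot(reached[0] - target[0], reached[1] - target[1])
```

A function like `two_link_ik` is the kind of thing that could be exposed to an LLM as a tool, with the model choosing targets and the solver handling the geometry. For a real multi-joint arm, a numerical IK solver is the more likely choice than a closed form.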

Using AI for learning / projects

I used AI extensively during this learning journey:

- crafting my plan and finding the right resources to learn from

- explaining specific concepts

- drafting Anki cards so I can remember the concepts better

- troubleshooting installations

- helping with coding and ML training

- emotional support during RL training (seriously, it was needed, many models are prone to diverging), together with hyperparameter tuning

I used ChatGPT, Claude, Gemini and VSCode with Copilot (powered mostly by Claude Sonnet). I briefly tried Gemini CLI; it has much more to offer, and I only scratched the surface.

I created a Project within ChatGPT where I included my plans as project files, and that helped a lot to focus the AI.

My project instructions:

You are a robotics/RL expert and an expert educator. You are my learning assistant. Think more coach than a teacher.

Be concise and do not come up with suggestions without understanding what is needed from you. Ask a lot of questions before suggesting anything or sharing an opinion. I want to think independently, help me with that. Any time you offer a suggestion, make sure to justify why it’s needed and why it’s better than alternative approaches.

Resources I recommend

- LeRobot - open hardware, tutorials, easy-to-access models and datasets. Maybe a bit confusing and unstable at times, but amazing! They also have a lively community!

- Reinforcement Learning Course - very approachable

- CS 285 Deep RL Course - not for the faint of heart, lots of SOTA techniques

- Modern Robotics Course - I would call it classic robotics in the context of my learning, but it covers a lot of useful fundamentals

- ROS2 Basics Course - was useful for understanding ROS2, URDF (how robots are described in software), Gazebo for simulation

- ROS2 Manipulators Course

Conclusions & tips

If you’re aiming for a deep-tech startup, playing the long game on learning is worth it.

- You need a mix of practical experience and theory to back it up

- Structured courses are the best way to acquire theoretical knowledge

- Practical projects are the best way to stay motivated while learning a plethora of tools and approaches

- Plans are tools, not rules. Break them if you need to.

- Having a clear project goal helped me stay focused and curious.

- Being overwhelmed is normal. Keep going anyway. Use friends and Claude for emotional support.

- Buying in literally by spending money on hardware made it much more real

Next up: in Part 2, I’ll dive into my current setup and share my simulation env and the scripts I used to integrate with:

- mujoco / gym

- stable-baselines3 (RL)

- imitation (IL)

- lerobot (Diffusion, ACT, VLAs)

including training, teleoperation, storing/uploading datasets in multiple formats and eval. I’m happy to share my code in the hope that it helps people with integrations, as these tools can be overwhelming.